My entry into the data science field started with mathematics, as I feared coding. Then, when the maths magic kicked in, I started doing big machine-learning projects. An essential part of machine learning projects is EDA, or exploratory data analysis. In simple terms, you need to spend a lot of time, almost 70%, preparing the data, and that is where I did a lot of data mining.

In data science, there is a saying: "garbage in, garbage out." So, you have to be completely sure of your data before diving into building a machine-learning model. I worked on many self-made datasets, because most data from free sources like Kaggle is pre-cleaned, so you don't get a chance to learn data preparation or mining. Working on self-made datasets helped me gain expertise in mining. Later, I started writing on LinkedIn, contributing to the community, and engaging in informative discussions on data preparation topics, which helped me get the top mining voice badge.

2. How do you tackle large dataset challenges?

Most tools are sufficient for ordinary datasets; they are pre-configured that way. When a dataset is too large for them to handle, we call it big data, and I have used tools like PySpark to work around that. In simple terms, it's a framework that looks like a combination of Python and SQL. Other options are using cloud servers or running the work in parallel.
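To make the parallel-processing idea concrete, here is a minimal sketch using only the Python standard library. It is not PySpark and not the interviewee's actual setup; the function names (`chunked`, `partial_stats`, `parallel_mean`) are hypothetical. The point it illustrates is the same one big-data frameworks rely on: split the data into chunks, summarize each chunk independently, then merge the small summaries.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, chunk_size):
    """Yield successive slices of `values`, each at most `chunk_size` long."""
    for start in range(0, len(values), chunk_size):
        yield values[start:start + chunk_size]


def partial_stats(chunk):
    """Summarize one chunk as (sum, count) so chunks can be merged later."""
    return sum(chunk), len(chunk)


def parallel_mean(values, chunk_size=1000, workers=4):
    """Compute a mean by summarizing chunks concurrently and merging the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_stats, chunked(values, chunk_size)))
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count if count else 0.0


mean_value = parallel_mean(list(range(10)), chunk_size=3)
```

A thread pool keeps the sketch short. For CPU-heavy work in Python, a process pool or a distributed framework such as PySpark would usually be the better fit, since each worker only ever needs to hold one chunk.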

3. Can you explain your process for building AI assets?

Begin by identifying the problem. Not every problem needs an AI solution, and managing an AI solution is neither easy nor cheap. Plus, you need more resources. Build one only if you think it solves a big enough problem and is the best way out.

Gather data. You need data for data science, and data science is the backbone of AI.

You need an appropriate sample of data that properly represents your population, and collecting it might be hard. Your options are buying data from vendors, scraping the data, using publicly available data, or creating synthetic data.

Clean and prepare the best version of your data. The data you receive will not necessarily be correctly ordered and structured for your needs. You may have to clean it, create new features, and scale the data. When working for a specific business, it's ideal to create insights that support your argument for using the data in a specific way.

Use an algorithm. You can choose from many machine learning algorithms, according to your need, to build your models, or you can use already trained models that are available to you.
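The preparation steps mentioned here (cleaning, creating new features, scaling) can be sketched in a few lines of plain Python. This is only an illustration under assumed data: the records, the column names (`area_sqft`, `price`, `price_per_sqft`), and the helper functions are hypothetical and do not come from any project described in the interview.

```python
def drop_incomplete(rows):
    """Keep only rows where no value is None."""
    return [row for row in rows if all(v is not None for v in row.values())]


def min_max_scale(values):
    """Rescale numbers to the range [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw records; one row has a missing value.
raw = [
    {"area_sqft": 1000, "price": 200000},
    {"area_sqft": None, "price": 150000},
    {"area_sqft": 2000, "price": 500000},
    {"area_sqft": 1500, "price": 300000},
]

# Clean: remove rows with missing values.
clean = drop_incomplete(raw)

# Create a new feature from existing columns.
for row in clean:
    row["price_per_sqft"] = row["price"] / row["area_sqft"]

# Scale: bring a numeric column into a common range.
scaled_area = min_max_scale([row["area_sqft"] for row in clean])
```

In practice, libraries such as pandas and scikit-learn provide these operations; the plain-Python version only shows what each step does to the data.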

In recent times, most work is done on the back of LLMs, or large language models, which are trained on vast amounts of text from the internet. For example, a GPT model has well over a billion parameters, the internal weights it learns during training.

Test and deploy. Once you train, the next step is to test whether the model gives the expected results. If not, you might have to retrain, use a different model, tweak some parameters, and so on. Once you are satisfied with your testing, you make your model accessible to the public via an app or website, which comes under the bracket of deployment.
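The test step can be illustrated with a small, self-contained sketch: hold some data back, fit on the rest, and check the result on the held-out part before deciding whether to retrain. Everything here is assumed for illustration, including the toy data, the one-parameter threshold "model", the deterministic split, and the 0.9 target; real projects would normally use a shuffled split and a proper model.

```python
def holdout_split(rows, every=4):
    """Hold out every `every`-th row for testing; train on the rest."""
    train = [r for i, r in enumerate(rows) if i % every != 0]
    test = [r for i, r in enumerate(rows) if i % every == 0]
    return train, test


def accuracy(predict, rows):
    """Fraction of (feature, label) rows that `predict` labels correctly."""
    correct = sum(1 for x, y in rows if predict(x) == y)
    return correct / len(rows)


def fit_threshold(train_rows, candidates):
    """Pick the candidate threshold with the best accuracy on the training rows."""
    return max(
        candidates,
        key=lambda t: accuracy(lambda x: int(x >= t), train_rows),
    )


# Hypothetical toy data: the label is 1 when the feature is 50 or more.
data = [(x, int(x >= 50)) for x in range(100)]

train, test = holdout_split(data)
threshold = fit_threshold(train, candidates=range(0, 100, 10))
test_accuracy = accuracy(lambda x: int(x >= threshold), test)

# Assumed acceptance target; below it you would retrain or try another model.
needs_retraining = test_accuracy < 0.9
```

The key design choice is that the test rows never influence the fitted threshold, so the test accuracy is an honest estimate of how the model behaves on data it has not seen.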

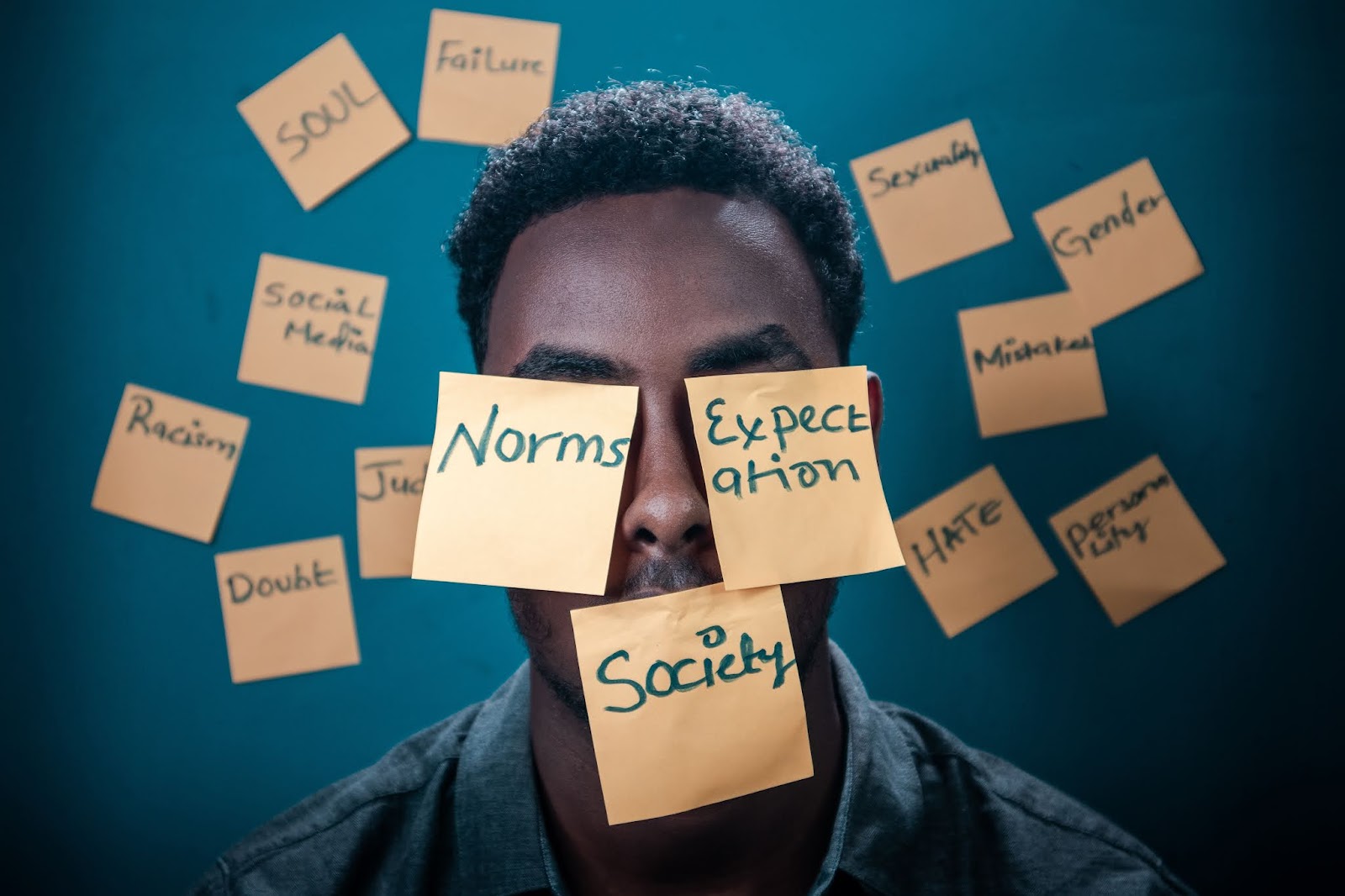

Ethical AI and responsible AI are two subjects that cover this thing properly. We learn many bad norms and apply them in our daily routine, including discrimination based on race, gender, or ethnicity, which we don't want our models to learn. So, it all comes down to the data. While feeding data to your model for training, make sure you give your model the right data.
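One simple way to inspect training data before feeding it to a model is to compare how often the positive label appears in each group. The sketch below is an assumption-laden illustration, not a complete fairness audit: the records, the field names (`group`, `approved`), and the function `positive_rate_by_group` are all hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, label_key):
    """Return the share of positive labels (label == 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        totals[row[group_key]] += 1
        positives[row[group_key]] += int(row[label_key] == 1)
    return {group: positives[group] / totals[group] for group in totals}


# Hypothetical training records with a sensitive attribute and a label.
records = [
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 0},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 1},
    {"group": "B", "approved": 0},
]

rates = positive_rate_by_group(records, "group", "approved")

# A large gap between groups is a signal to examine the data more closely.
gap = max(rates.values()) - min(rates.values())
```

A gap on its own does not prove the data is biased, but it tells you where to look before a model learns the pattern.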

5. Can you share a successful collaboration story?

I worked with the energy sector to optimize costs and estimate how many new oil wells they should plan in the next financial year, taking into account the current balance, their capacity for monthly logistics, and the financial side. Later, we used AI to read data out of the tables, making the results accessible to the end user: a laborer with no knowledge of AI or machine learning who can still use the reports and data generated by our tool.

6. What is your favorite book and why?

There are a couple of data-backed books. Outliers by Malcolm Gladwell is quite an interesting book that relates to machine learning and the career world. Life 3.0 consists of 10 stories about questions such as: How can we grow our prosperity through automation without leaving people lacking income or purpose? What career advice should we give?

BIO:

During his tenure, Mr. Shubhankit Sirvaiya served clients from diverse industries such as telecom, automobile, and energy, where he used his expertise in data science to design and implement solutions for them. Over the years, he gained hands-on experience in various areas of data science, including data analysis using advanced Excel techniques and Python libraries; supervised and unsupervised machine learning algorithms; data query languages; data mining techniques in Oracle SQL/PLSQL; visualization tools like Power BI and interactive dashboards; statistical analysis and its significance in model building; the Django framework for web applications; and implementing ML solutions and data analysis on Google Cloud Platform.

Interviewed by: Mishika Goel
